Claude Code fell off a cliff these last few weeks. Anyone actually using it felt the drop: dumber edits, lost context, contradictions, the works. No, we weren’t imagining it.

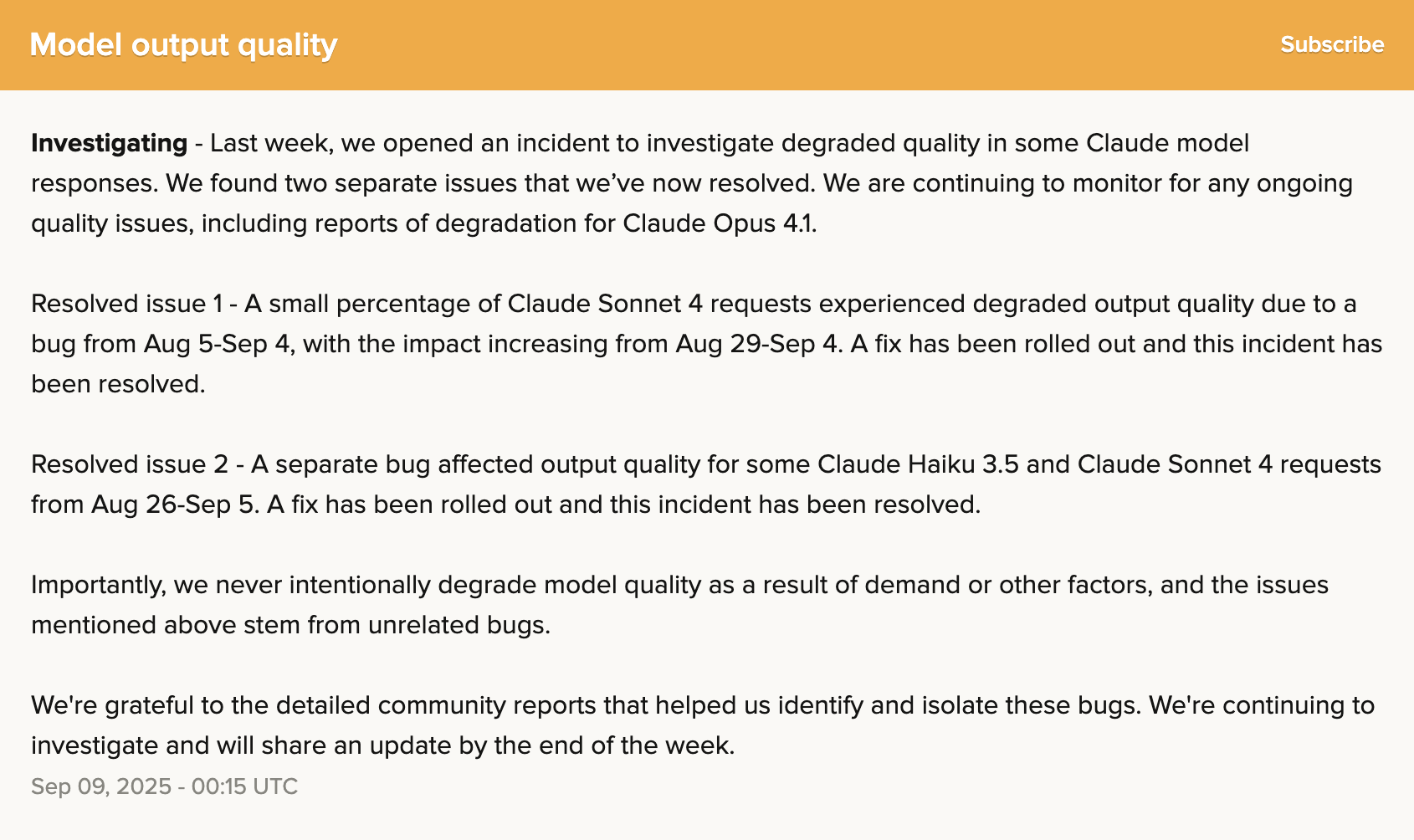

Well, Anthropic has finally spoken and said what many of us already knew weeks ago. From their incident post on September 8:

> Investigating - Last week, we opened an incident to investigate degraded quality in some Claude model responses. We found two separate issues that we’ve now resolved. We are continuing to monitor for any ongoing quality issues, including reports of degradation for Claude Opus 4.1.
>
> Resolved issue 1 - A small percentage of Claude Sonnet 4 requests experienced degraded output quality due to a bug from Aug 5-Sep 4, with the impact increasing from Aug 29-Sep 4. A fix has been rolled out and this incident has been resolved.
>
> Resolved issue 2 - A separate bug affected output quality for some Claude Haiku 3.5 and Claude Sonnet 4 requests from Aug 26-Sep 5. A fix has been rolled out and this incident has been resolved.
>
> Importantly, we never intentionally degrade model quality as a result of demand or other factors, and the issues mentioned above stem from unrelated bugs.
>
> We’re grateful to the detailed community reports that helped us identify and isolate these bugs. We’re continuing to investigate and will share an update by the end of the week.

Good. Now let’s talk about the part they skipped: the silence.

Silence, Then PR

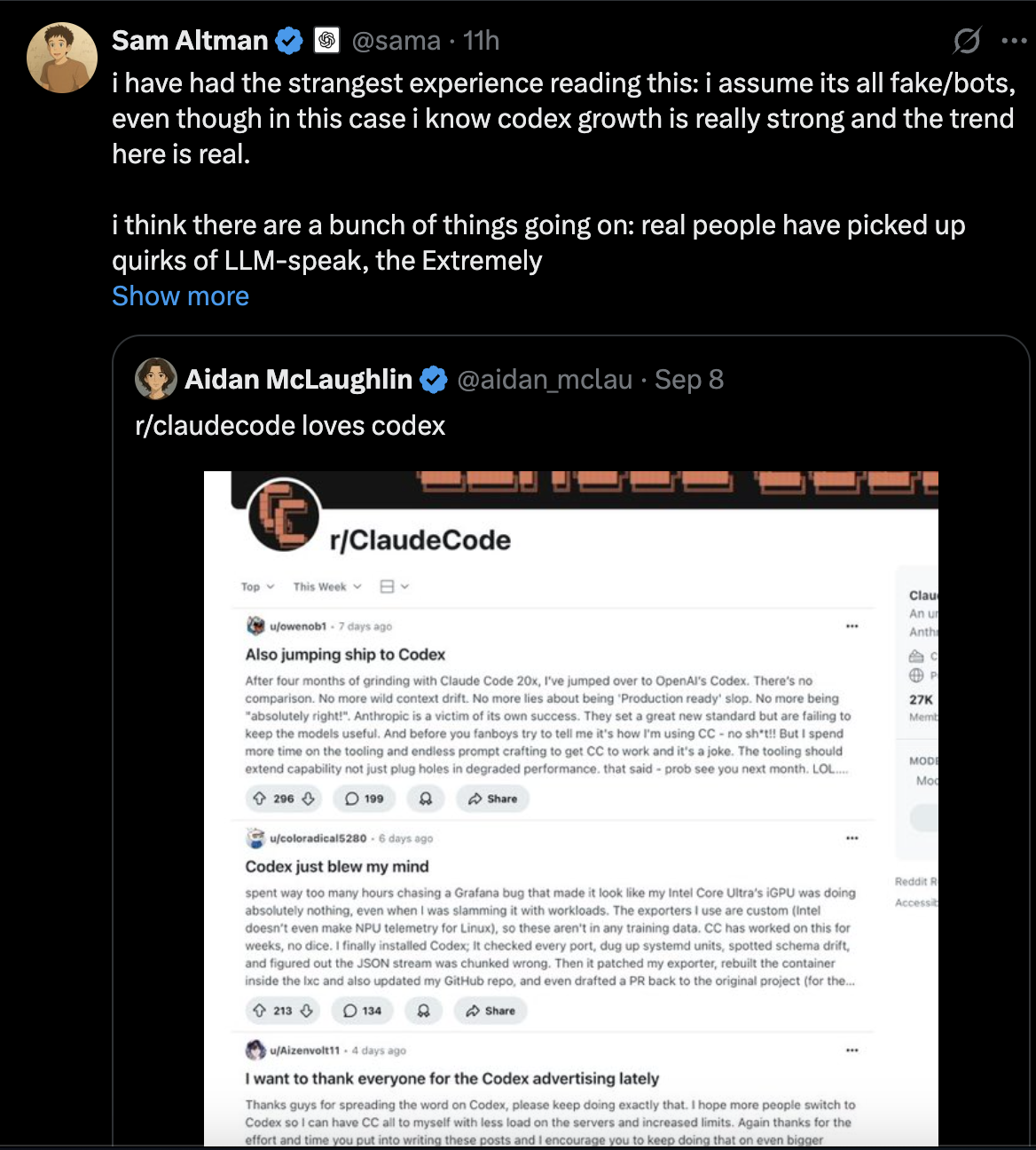

Anthropic were radio silent while their own subreddits filled with “I’m cancelling” threads. /r/ClaudeCode was a daily feed of broken behaviour and people jumping ship to Codex CLI. The company said nothing.

And then Sam Altman quote‑tweeted Aidan McLaughlin’s screenshot of that subreddit. Not before. After. Suddenly we get an incident post. Draw your own conclusions, but this looks reactive, not responsible.

Look At The Dates

- Issue 1 (Sonnet 4): Aug 5 → Sep 4 (worse from Aug 29 → Sep 4)

- Issue 2 (Haiku 3.5 and Sonnet 4): Aug 26 → Sep 5

That’s not a brief glitch; that’s weeks of degraded output, while many of us were paying $200/month for “Max.”

Anthropic says they “never intentionally degrade model quality.” Maybe. Users don’t experience intent; we experience results. Quality dropped. Communication dropped to zero. Only after a public shaming did we get the tidy “two bugs, resolved” line.

And what about Claude Opus 4.1? The one that isn’t covered by either fix and is still only being “monitored”? It’s been awful too. I had a 7,000‑word document where it lost all context after 2,000 words. It hallucinated wildly. It contradicted itself. It made basic mistakes. It was unusable. Opus 4.1 is meant to be the flagship, the best. It hasn’t been.

This all overlaps the August 28 rate‑limit crackdown “to curb abuse” of Claude Code. If the limits had nothing to do with the quality drop, prove it. Publish the change logs, the routing percentages, the exact rollout times. Without transparency, it just looks shady.

To me it appears Anthropic rushed out the rate limits, then scrambled to patch the quality issues that followed. Or they intentionally degraded quality to manage load, then lied about it. Either way, it’s a mess.

For a company valued at well over a hundred billion dollars, this is amateur hour. You don’t charge $200 a month, ship mystery changes, and disappear for weeks. Zero tolerance.

Minimum Acceptable Now

- A real post‑mortem. Exact causes, flags, versions, fix timestamps.

- Credits or refunds for the period of degradation. Don’t make people beg support.

- Public quality dashboard for coding evals. Let the graph speak.

- Engage in the subreddits. Real engineers, real timestamps. No more silence.

I love Claude Code. It’s been the GOAT for such a long time, and I was a happy Claude Code user. Lately it felt like arguing with a distracted child. Fix the models, sure, but fix the culture more. Stop shipping in the dark. Earn the trust you just burned.